
Breast Tumors, Like Guns In Luggage, Missed Because They’re Rare

We already knew this about guns and knives hidden in baggage. Now it seems the same important insight applies to cancers hidden in breasts: When the target of a visual search — like a weapon or a tumor — occurs only rarely, we’re far likelier to miss it than if it were much more common.

Jeremy Wolfe, director of the Visual Attention Lab at Brigham and Women’s Hospital, uses this pithy phrase for the problem: “If you don’t find it often, you often don’t find it.”

And a problem it is, from airport security to Pap smears. Growing research suggests that because some of the perils we most want to seek and destroy are extremely rare, we're naturally ill-suited to the task.

A cognitive scientist and vision expert, Wolfe began applying his lab’s work to airport security in the years after 9/11. Now he has just presented real-world findings on breast cancer at the annual convention of the Radiological Society of North America, a gathering of tens of thousands of medical scanning professionals.

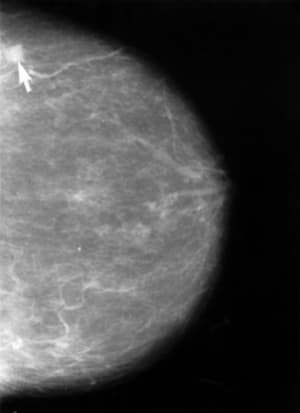

Typically, mammography turns up three or four cases of breast cancer for every 1,000 scans, but misses 20-30% of tumors, Wolfe said. His central finding: As many as half of those misses could be the result of the “behavioral effects of searching for something very rare.”

First, to clarify the point, here is an example from Wolfe’s convention talk, based on lab experiments:

Imagine you have X-rays of 20 bags with guns and knives in them. Mix them into a stack of 40 X-rays in total, so the “prevalence” of weapons is 50%, one in two. If you were a typical scan-checker in Wolfe’s experiment, you would fail to catch only four or so of those 20 hidden weapons.

Now imagine those same 20 weapon-laden suitcases mixed into a pile of 1,000 bags, so the prevalence of weapons is a mere 2%. It’s the same 20 bags, but your “miss” rate more than doubles, from perhaps four missed weapons to perhaps eight or nine.

Why? These searches are hard tasks, exhausting for our fallible human eyes and brains. Plus, we have a built-in hesitancy about saying we have found something rare. And when targets are rare, we tend to give up more quickly.
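The arithmetic behind the luggage example is easy to check. The short Python sketch below recomputes prevalence and miss rate for both stacks from the figures quoted above. The value 8.5, standing in for “eight or nine” misses, is an assumption, and the helper names are purely illustrative. For comparison, it also prints the rate implied by the three or four cancers found per 1,000 mammograms, taking 3.5 as an assumed midpoint; because missed cancers are not counted, that figure is only a rough lower bound on true prevalence.

```python
def prevalence(targets: float, total: float) -> float:
    """Fraction of items in a stack that contain a target."""
    return targets / total


def miss_rate(missed: float, targets: float) -> float:
    """Fraction of targets the searcher failed to report."""
    return missed / targets


WEAPON_BAGS = 20  # the same 20 weapon-laden bags in both stacks

# (total bags, approximate weapons missed); 8.5 is an assumed midpoint of "eight or nine"
scenarios = {
    "stack of 40": (40, 4.0),
    "pile of 1,000": (1000, 8.5),
}

for name, (total, missed) in scenarios.items():
    print(
        f"{name}: prevalence {prevalence(WEAPON_BAGS, total):.0%}, "
        f"miss rate about {miss_rate(missed, WEAPON_BAGS):.0%}"
    )

# Cancers found per 1,000 screening mammograms (3.5 assumed as a midpoint of
# "three or four"); a rough lower bound on prevalence, since misses are not counted.
print(f"mammography: about {prevalence(3.5, 1000):.2%}")
```

The weapon bags are identical in both stacks; only how rare they are changes, and the miss rate roughly doubles.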

Go look for a zebra

Say I tell you to go out to the streets of Boston and look for a zebra, Wolfe said.

You probably don’t want to do that for very long, and you’re unlikely to think “Bingo!” if you see something stripey out of the corner of your eye. Now say we’re on safari in Africa and I tell you to look for a zebra; you’ll spend all your time looking and you might think “There’s one!” when you see anything stripey.

Also, Wolfe explained, when we try to interpret ambiguous images, we set a threshold in our mind for saying “Yes, this is what I am looking for” (a zebra, a weapon, a tumor) or “No, it isn’t.” If almost everything is a “no,” we tend to move that threshold toward the “no” side.

“Those are very ingrained behaviors,” he said. “Those rules operate automatically whether you want them to or not.”

And they operate in many different domains: He has just published a paper on Pap smears that found a very similar problem in the search for cervical cancer.
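The threshold shift Wolfe describes has a standard formalization in signal detection theory. The article does not invoke that model, so the Python sketch below uses it as an assumption, not as Wolfe’s own analysis. An equal-variance Gaussian observer says “yes” when an internal signal exceeds a criterion, and the criterion that maximizes overall accuracy moves upward as targets become rarer, so more real targets fall below it and are missed. The discriminability `d_prime = 2.0` is an arbitrary illustrative value. Because an ideal observer shifts its criterion much further than people actually do, read only the direction of the effect from the output, not its size.

```python
import math


def phi(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def optimal_criterion(prevalence: float, d_prime: float) -> float:
    """Criterion that maximizes percent correct for an equal-variance
    Gaussian observer: say "yes" when the internal signal x exceeds it.
    Noise ~ N(0, 1), target ~ N(d_prime, 1)."""
    beta = (1.0 - prevalence) / prevalence  # prior odds against a target
    return d_prime / 2.0 + math.log(beta) / d_prime


def miss_rate(prevalence: float, d_prime: float) -> float:
    """Probability that a target-present image falls below the criterion."""
    c = optimal_criterion(prevalence, d_prime)
    return phi(c - d_prime)  # target responses are centered on d_prime


D_PRIME = 2.0  # assumed discriminability, chosen only for illustration

for p in (0.5, 0.1, 0.02, 0.004):
    print(
        f"prevalence {p:>6.1%}: criterion {optimal_criterion(p, D_PRIME):.2f}, "
        f"miss rate {miss_rate(p, D_PRIME):.1%}"
    )
```

At 50% prevalence the criterion sits halfway between the two distributions; as prevalence falls, the model moves it toward the “no” side, just as Wolfe describes.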

A felony-free experiment

Though it makes intuitive sense that our brains would have the same difficulties detecting rare tumors as rare weapons, getting to those real-world breast cancer findings was far from simple for Wolfe, Robyn Birdwell, head of women’s imaging at the Brigham, and Karla Evans, a postdoctoral fellow in Wolfe’s lab.

The tricky part was slipping the experiment’s phony cases, undetected, into the normal workweek of radiologists at the Brigham.

“Our original method would have turned out to be a felony,” Dr. Wolfe said, “because you can’t mess with medical records that are being reported to the state. That would have been very bad. It’s basically a medical informatics challenge. It took a lot of help from our friends in Radiology to figure out how to actually get this done.”

But figure it out they did, and they began to slide phony test cases (old scans whose diagnoses were already known) into the radiologists' normal workflow: 100 cases, 50 with cancer and 50 without, over nine months.


The radiologists knew about the experiment, but not which scans were part of it. (If they detected cancer in a phony scan, they were informed immediately so they wouldn’t waste time urgently trying to alert the fake patient’s doctor.)

In a second part of the experiment, radiologists read the same 100 cases, with their 50% prevalence of cancer, but all at one time.

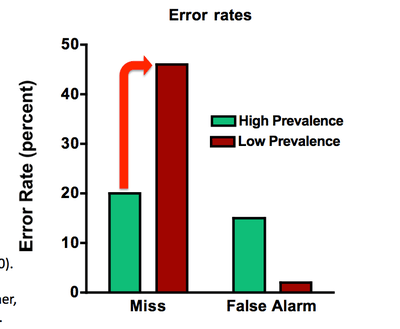

What happened? The radiologists missed just 12% of the cancers when they were reading all 100 cases in one sitting. But in the real-world clinical setting, where cancer was rare, they missed far more: 30%.

It is that difference between the two error rates that is important, Wolfe emphasized. “The 30% error rate in the clinic should not be taken as a measure of the performance of Brigham radiologists,” he said, because the 100 cases were not chosen randomly and were skewed to include many difficult cases.

The important point is that the same cancers that were missed in the clinic were found when the prevalence was 50%, he said. “This is a case where potentially half the errors in an important medical screening test could be due to the way the human mind is put together. It is not due to any misbehavior by our expert readers. Not due to some problem with the equipment. Not due to some bad, evil government policies. This is fundamental cognitive science, interacting with an important real-world problem.”
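To put a number on the gap Wolfe emphasizes, the Python sketch below takes the two reported miss rates for the same 100-case set and asks what share of the clinic misses disappeared when cancer prevalence was 50%. As a simplification, it assumes that any cancer missed in the one-sitting reading was also missed in the clinic, so the difference between the rates is the part attributable to prevalence. Under that assumption about 60% of the clinic misses were prevalence-related, close to Wolfe’s “potentially half.” Because the case set was deliberately skewed toward difficult cases, this illustrates this one experiment, not a general estimate. The function name is hypothetical.

```python
def prevalence_related_share(clinic_miss: float, batch_miss: float) -> float:
    """Share of low-prevalence misses that were caught at high prevalence.

    Assumes every cancer missed in the high-prevalence batch reading was also
    missed in the clinic, so the difference between the two rates is the part
    attributable to the change in prevalence.
    """
    if not 0.0 <= batch_miss <= clinic_miss <= 1.0:
        raise ValueError("expected 0 <= batch_miss <= clinic_miss <= 1")
    return (clinic_miss - batch_miss) / clinic_miss


CLINIC_MISS = 0.30  # test cases scattered through nine months of routine reading
BATCH_MISS = 0.12   # the same 100 cases (50% with cancer) read in one sitting

share = prevalence_related_share(CLINIC_MISS, BATCH_MISS)
print(
    f"gap: {(CLINIC_MISS - BATCH_MISS) * 100:.0f} percentage points; "
    f"share of clinic misses recovered at 50% prevalence: {share:.0%}"
)
```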

Real-world remedies

So what is to be done? Sadly, we can’t just automate our way out of this problem; computerized detection is just not good enough at this point.

How about routinely sticking a bunch of phony cancer cases into radiologists’ stacks of scans, to make “cancer” less rare?

Nice idea, Wolfe said, except that radiologists are already reading as many cases as they can and would not take kindly to a doubling of their burden, especially for “fake” patients.

Airport security findings brought a similar dilemma: Yes, you could make sure that TSA screeners turned up more weapons by planting more of them in the stream of bags, but “the line that now goes halfway out into terminal C would go all the way into terminal C. You would not be happy about that.”

Booster shots

So is there a more practical remedy?

There are methods that improve performance in the lab, and Wolfe hopes to try them out in real-world settings, he said.

For example, there’s a so-called “booster shot”: Before a person begins a needle-in-a-haystack task like breast cancer screening, they might do a quick warm-up lasting several minutes. They would be asked to find targets that were present 50% of the time and would then get feedback on whether they were right.

“In the lab, at least, that improves performance,” Wolfe said. “You’ll have to call back in a year or two to find out whether that makes a difference clinically, because we’re just beginning to fire up that experiment.”
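The warm-up Wolfe describes can be sketched as a small trial generator: a short block in which exactly half the images contain a target, presented in random order, with feedback after each response. The Python below is a hypothetical illustration of that protocol, not the lab’s software; the names `Trial`, `build_warmup`, and `feedback`, the image IDs, and the block length are all assumptions.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    image_id: str         # hypothetical identifier for a practice image
    target_present: bool  # ground truth, known in advance for practice cases


def build_warmup(target_ids: list[str], clear_ids: list[str],
                 n_trials: int, seed: int | None = None) -> list[Trial]:
    """Return n_trials practice trials, exactly half containing a target."""
    if n_trials % 2:
        raise ValueError("n_trials must be even for a 50% split")
    half = n_trials // 2
    rng = random.Random(seed)
    trials = [Trial(i, True) for i in rng.sample(target_ids, half)]
    trials += [Trial(i, False) for i in rng.sample(clear_ids, half)]
    rng.shuffle(trials)  # interleave so targets cannot be predicted by position
    return trials


def feedback(trial: Trial, said_present: bool) -> str:
    """Immediate feedback on a single response."""
    if said_present == trial.target_present:
        return "Correct."
    if trial.target_present:
        return "Miss: this image contained a target."
    return "False alarm: this image had no target."


if __name__ == "__main__":
    targets = [f"T{n:03d}" for n in range(40)]  # hypothetical target images
    clears = [f"C{n:03d}" for n in range(40)]   # hypothetical target-free images
    block = build_warmup(targets, clears, n_trials=20, seed=1)
    print(sum(t.target_present for t in block), "of", len(block), "trials contain a target")
    print(feedback(block[0], said_present=False))
```

Fixing the split at exactly 50%, rather than drawing each trial independently, guarantees that the warm-up presents the high prevalence it is meant to instill, even in a block this short.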

This program aired on December 2, 2011.