Blind Opera Superstar Andrea Bocelli Seeks High-Tech Vision At MIT

What's a young blind Italian student to do when his beautiful blond crush, Mary, approaches?

Well, if a slew of new assistive technologies now being developed at MIT and Northeastern come to fruition, the intimate interaction might unfold like this: the blind student, wearing a cutting-edge device — a smart jacket, for instance — equipped to communicate a complex array of information privately to a blind user, will be able to sense Mary's presence, facial expressions and body language, chat intelligently with her about literature and move in to squeeze her hand when a rival suitor approaches.

The characters here are fictional, of course. But the overarching ambitions of this research, funded by the foundation of the blind Italian opera superstar Andrea Bocelli, are both intimate and far-reaching. "The idea was a huge bet," Bocelli said today, speaking through a translator at an MIT workshop that introduced an array of new technologies designed to help blind people live, study and work more independently. "To create a tool, a device, that would basically substitute itself for the eyes." He characterized the research as going from the "impossible to the possible."

The genesis of the Bocelli-MIT venture was a post-concert meeting in Boston several years ago, Bocelli said. He brainstormed with several MIT professors to find out what kind of technology for the blind would "be possible." Since then, a collaborative, cross-disciplinary team of researchers has developed prototypes that may someday deliver critical information to the blind: everything from dynamic information about safe walking terrain and hazards, to real-time help with social interactions through wearable devices, to a vibrating watch with a high-resolution tactile display that can convey important information through the skin.

"I have to be honest, the idea of this project was not born of my own needs; I am in a privileged situation," with an entourage of helpers all around, said Bocelli, who grew up with low vision and then became completely blind in childhood following a sports-related accident. But "there are many people, some of them my friends, that are living alone in a city, and they have the issue of going to work on their own, going grocery shopping, locating the items on the shelves... The issue is really living on one's own." Speaking at a news conference, Bocelli conceded that one day he might use the technology himself: "Of course, when it will come to fruition, it will be helpful to me as well, because the main problem is that humanity has people who are never happy with what they have. This technology will be helpful first for people who are on their own, but then it will come in handy for people like me, who want to be on their own sometimes."

The Andrea Bocelli Foundation says it has given about $500,000 to fund researchers at MIT and Northeastern to develop these technologies.

A central endeavor, called the Fifth Sense Project (it seeks to replace the missing sense of sight), involves a team of researchers led by Seth Teller, a professor of computer science and engineering in the EECS department and a principal investigator in the Computer Science and Artificial Intelligence Lab. (For full disclosure, see below.)

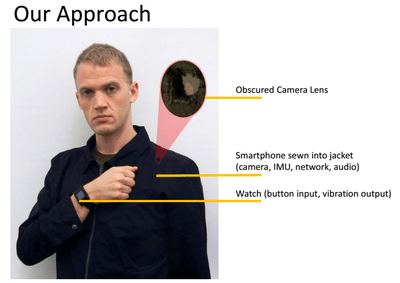

The team is developing wearable devices for blind and low-vision people that "combine sensing, computation and interaction to provide the wearer with timely, task-appropriate information about the surroundings — the kind of information that sighted people get from their visual systems, typically without conscious effort," Teller said.

One of Teller's graduate students, David Hayden, is working on what he calls a "socially acceptable" wearable acquaintance recognizer, or assistant, that provides feedback about nearby people through audio, tactile and other modes. Hayden explained: "It's continuously watching for anyone you know. The device will try to identify the people in your life. A lot of great conversations start at the water cooler, but blind people can't initiate them."

Another project would identify and read text, say, on appliances, which have become less accessible to blind people as they've moved from knobs to touchscreens.

The Fifth Sense Project, still in its early phase, focuses on several areas:

1. Safe Mobility And Navigation

Imagine walking around the city of Boston with your eyes closed. Kind of scary, right? This technology tries to address some critical questions about getting around safely in the world: Where are the appropriate walking surfaces? How can blind people avoid tripping and collision hazards? Ultimately the device might be able to answer more specific questions, like: Where am I? Which way is it to my destination? When is the next turn, landmark or other salient feature of the environment coming up? Do my surroundings include text, and if so, what does it say? Where is the kiosk, concierge desk, elevator lobby or water fountain I'm looking for? What transit options (bus, taxi, train, etc.) are nearby or arriving?

2. Detecting And Identifying People

Social interactions are, obviously, essential for a full life.

For the blind, it's not so easy to initiate these kinds of interactions, so this technology aims to let users know when friends, acquaintances or strangers are approaching (like the fictional, gorgeous Mary on the Italian campus) and who they are, and to convey their body stance, their body language and even what they are wearing.

3. How To Convey Information Non-Visually Through Tactile And Aural Interfaces

The key question here is: How can the system effectively engage in spoken dialogue with the blind or visually impaired person so that he or she can specify goals or needs or desires?

Obviously, there are already technologies out there to assist the blind. The American Foundation for the Blind identifies two categories: general items, like computers, smartphones and GPS devices, and more personally tailored devices, including "everything from screen readers for blind individuals or screen magnifiers for low-vision computer users, devices for reading and writing with low vision, to braille watches and braille printers."

Others have personalized assistance even further, like VizWiz, an iPhone app that allows blind people to get quick answers about their surroundings from sighted web volunteers, who respond to questions submitted over the Internet.

But the MIT team's wearable device project is more ambitious: eventually the technology should work in real time, they say, and support both mobility and social functions without sacrificing privacy.

"Why develop assistive technology for blind people?" Teller said in prepared remarks. "The unemployment rate for blind people is estimated at 75 percent in the United States, and approaches 100 percent in many parts of the developing world. Access to education is a key barrier; many blind and visually impaired children are simply left behind, unable to access the materials needed for their studies, especially in the areas of science, engineering and technology..."

Here's more from Teller on why this research is important:

Think about basic mobility, the ability to move safely and independently from point A to point B, which is a key aspect of adult life and employment. Yet blind people are unaware of imminent obstacles and trip hazards. Even with a long cane they must move slowly through unfamiliar environments, or accept sighted assistance, to stay safe.

Or consider social information, the awareness of what other people are nearby and what they are doing. This is again a key aspect of a participatory life, to which blind people have fundamentally less access than sighted people. Here blindness changes the basic nature of social interaction, from proactive to reactive, from fluid to halting, from independent to dependent.

Finally consider environmental information, awareness of the text and symbols that are everywhere in our surroundings, used to find one's way, to locate personal objects, to shop, to identify resources, to operate electronics and appliances, to accomplish the myriad tasks involved in living an independent life. Again, this kind of environmental information, with rare exceptions, is simply not available to blind people. And the problem is getting worse as more and more appliances are designed with visual touchscreens inaccessible to blind people.

So at its heart, we are tackling a problem of access: how can we develop machines that can capture and interpret this kind of information and relay it to a blind user, rapidly enough to be useful in a dynamic world? This problem is clearly deeply compelling from a technical perspective, as it involves the nature of visual information in natural and built environments, and algorithms for rapidly sensing, interpreting and delivering such information. But it's also a problem of compelling social interest, as its solution will level the playing field for tens to hundreds of millions of people around the world not only in education and employment, but in social and personal pursuits...

In the United States, for example, despite the great number of congenitally blind and visually impaired people, and the growing number of people losing vision through disease or normal aging processes, there is proportionally little funding available to support assistive technology development for visual function.

One might expect that with the wars of the last decade, and the many thousands of veterans returning home without vision, major public resources would have been allocated to alleviating their disability, but this is simply not the case...

Bocelli, the renowned, Pisa-born tenor who, according to news reports, is "the biggest-selling artist in the history of classical music" with nearly 100 million albums sold, said he's pleased to have sparked this flurry of research. Low-key, wearing dark sunglasses and encircled by his Italian minders, Bocelli was asked if he'd be sporting a wearable device or vibrating watch someday. He paused for a second, shrugged and, reverting to English, replied: "Why not?"

(Here's the full disclosure: Seth Teller is my husband. I normally never write about his work, but Bocelli's star power made it a news story today. And no pretense of objectivity here: I think what he and his colleagues are attempting is very cool.)

This program aired on December 6, 2013. The audio for this program is not available.