
Commentary

What My 90-Year-Old Mom Taught Me About The Future Of AI In Health Care

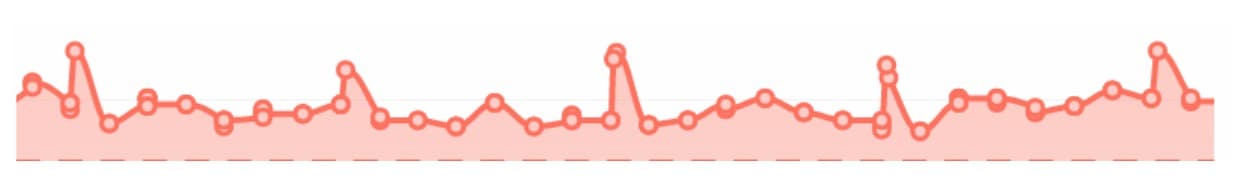

Quick: What is the hilly red profile in the figure above?

No, it’s not my fitness tracker’s daily step count, but you’re close.

It’s a set of daily weights, as measured by an electronic scale over a period of several weeks. More specifically, the daily weights of my 90-year-old mother, who continues to live in her own apartment despite all my offers. "I outlived Hitler and Stalin," she says. "I can take care of myself."

She can, in fact, take care of herself, but over recent months I’ve found that she does so far better with some digital help. That experience, though only a single case, has persuaded me that recent spectacular gains in artificial intelligence could bode far better for our health than studies to date suggest. It has also persuaded me that caring, common-sensical humans will not be replaced by AI any time soon.

My mother has moderate heart dysfunction, and her physician has her on all the right medications, including a daily dose of Lasix, a "water pill" that makes you urinate more and thereby rid your body of excess salt and water. Yet last year, over several weeks, her legs became increasingly swollen with fluid, to the point that it oozed out of her skin. Her shins looked as if they were covered with tears. She was also in quite a bit of discomfort.

Her physician sent her to the emergency room, where an ultrasound showed that her heart was enlarged and pumping less effectively than it should. She landed in the hospital for a week, where the most important treatment she received was intravenous Lasix, a far more potent form than her usual pills.

By the time she left the hospital, her legs had not returned to normal but were visibly thinner. As I would have said as a callous medical student, she had been effectively "dried out." She was also considerably weaker from spending a week in a hospital bed, but regained her strength with the help of a physical therapist.


Six months later, a similar scenario played out, but worse. This time, she had to go to a rehabilitation facility after her hospital stay because she was too weak to care for herself or even to walk without help. I suppose if you survive malaria, starvation and typhus as a child, you must be made of tough protoplasm. After two weeks of intensive rehab, she was back in her apartment.

But two hospital stays in such a short period concerned me. So I decided to try something that, from my day job as a researcher in biomedical informatics (the use of computing to advance medicine), I knew many health systems had tried before, with highly variable and often disappointing results. Nonetheless, I was determined to make it work.

The Plan

The plan was to track her weight daily and, whenever there was any sign of increased fluid, to recommend an extra dose of Lasix (one extra pill) to restore her fluid balance.

This type of thinking has informed doctors for decades. Outside the hospital, it has worked well for some, but multiple trials, several involving computerized alerts every week to change water-pill dosage, have not conclusively shown benefit.

On the other hand, a friend’s father, a physician, had defied the odds and lived for decades with severe heart failure by weighing himself daily and adjusting his medications accordingly. That was not a trial, true, but it seemed remarkable, and I wanted to emulate it.

Before I go further, let me confess that when I tell this story to my colleagues, especially those trained in internal medicine or cardiology, their responses range from outright alarm to pursed-lip disapproval. It only gets worse when I remind them that I trained in pediatrics. So let’s be clear: Don't try this at home, kids. And I would emphasize that I did not remove my mother from her regular internist’s care. He remained as a safety net, and I communicated with him copiously.

Back to my story: The challenge was to manage my mother within the constraints of my demanding schedule. I decided the first and most crucial task was to get accurate weights sent to me promptly so that I could act on them. To that end, I purchased an internet-enabled scale from Fitbit that let me check my mother's weight through Fitbit's web application.

I asked my mother to weigh herself every morning before eating. In the first few weeks, if she forgot to weigh herself, I could see that and would call to nudge her. Within a month, I no longer had to remind her. Instead, she’d call me to see what I thought of her weight.

Now for the easy part: how to control fluid balance. Heart physiology is complex, and many have tried with only partial success to devise computational models that can predict how it will change with perturbations from diet, medications, disease or exercise. But I boiled it down to one question: How to determine whether she should take an extra Lasix on a given day?

I'll call my plan an algorithm, so it will sound authoritative:

1. If her weight increases by more than 1 pound in one day, recommend one extra Lasix pill.

2. If her weight increases by only 1 pound, wait to see whether it increases by another pound in the following two days; if it does, recommend an extra Lasix pill. And if she took the extra pill, then:

3. If the weight does not return to baseline by the second day after the extra dose, give one additional dose on the third day. If on the third day the weight has not returned to baseline, visit her in her apartment and see whether her legs are swollen or her breathing has changed.

This third part of the algorithm I labeled "visiting nurse." If I saw anything that worried me — swollen legs, faster breathing, poor skin color — I would call her doctor and ask him to evaluate her. At this point, we’d be back in the conventional medical management world, but I’d have a lot more useful information to share with her doctor than I would otherwise.
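To make the rules concrete, here is a minimal Python sketch of one possible reading of the three steps. It is hypothetical, not the software actually used: the function names, the assumption that weights arrive once each morning rounded to whole pounds, the known "baseline" weight passed in by the caller, and the choice to count step 3's days from the first extra dose are all assumptions layered on top of the article's wording. The "visiting nurse" step is returned as an action for a person to carry out, not something the code decides.

```python
from __future__ import annotations

# Hypothetical sketch of one reading of the extra-Lasix rules above.
# Assumptions (not from the article): one weight per morning, rounded to
# whole pounds; a known baseline weight; step 3 days are counted from
# the day of the first extra dose (day 0).

EXTRA_PILL = "recommend one extra Lasix pill"
VISIT = "visiting nurse: check legs, breathing and skin color"


def needs_extra_pill(weights: list[int]) -> bool:
    """Steps 1 and 2, judged on today's weight (the last entry)."""
    today = len(weights) - 1
    if today < 1:
        return False
    # Step 1: a gain of more than 1 pound since yesterday.
    if weights[today] - weights[today - 1] > 1:
        return True
    # Step 2: a gain of exactly 1 pound on day d, followed within the
    # next two days by at least one more pound.
    for d in (today - 1, today - 2):
        if d >= 1 and weights[d] - weights[d - 1] == 1:
            if weights[today] - weights[d] >= 1:
                return True
    return False


def todays_actions(weights: list[int], baseline: int,
                   dose_day: int | None) -> list[str]:
    """Return today's recommendations.

    dose_day is the index of the first extra dose in the current
    episode, or None if no episode is under way.
    """
    today = len(weights) - 1
    if dose_day is None or today - dose_day > 3:
        # No episode (or it has ended): apply steps 1 and 2.
        return [EXTRA_PILL] if needs_extra_pill(weights) else []
    actions = []
    # Days 0 to 2 of an episode: the extra dose is already taken, so watch.
    if today - dose_day == 3:
        # Step 3: not back to baseline by the second day -> one more dose.
        if weights[dose_day + 2] > baseline:
            actions.append(EXTRA_PILL)
        # Still above baseline on the third day -> go and look.
        if weights[today] > baseline:
            actions.append(VISIT)
    return actions
```

A caller would set `dose_day` to today's index whenever an extra pill is recommended with no episode under way. For example, `todays_actions([150, 150, 152], baseline=150, dose_day=None)` returns `[EXTRA_PILL]` for a 2-pound overnight gain. Keeping the visit as its own action, rather than folding it into the dosing logic, leaves the judgment about swollen legs or breathing to a person.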

The algorithm also included sleuthing out the cause of the weight gain. On the phone I’d go over what she had eaten. Often the culprit was a source of additional salt. Only after reviewing a typical salad and pasta dinner would I learn that she’d enjoyed a dozen delicious salt-loaded crackers.

These "debugging" phone calls after each weight gain quickly eliminated such diet-borne risks. They also gave my mother a very concrete sense of which foods to avoid.

So What About AI?

Now back to the graph I shared above. It shows a few weeks of what has gone on for over a year. I managed each of those peaks according to the algorithm. Through the miracle of the internet and smartphones, I was able to run the algorithm even when I was in a distant part of the globe to give a talk or on a family vacation.

Best of all, my mother hasn't even come close to needing to go back to the hospital. Her legs remain completely unswollen. Also, I never called her doctor about persistent fluid gain because that part of the algorithm was never triggered.

Moreover, after a few months, my mother started calling me to let me know that she had already implemented the algorithm for that day, because she’d gotten tired of waiting for me to call her with my recommendation.

So what about AI? If computers can now beat human players at Texas hold 'em, a poker game of imperfect information and bluffing, surely they can manage patients like my mother?

I’m not so sure.

A frail, elderly patient’s health may be influenced by one or more perturbations that span the full spectrum of human experience: how much salt was in yesterday’s food, the appearance of a skin infection on a leg, a change in thyroid hormone levels, increased fluid loss due to apartment heat after an air conditioner failure, sad news that dampens mood and in turn reduces exercise.

That is only a partial list of the challenges that my mother has overcome in the past year.

And though it may seem straightforward, managing an outpatient with heart failure is far more difficult than the apparently more complex tasks behind the recent successes of "deep learning": finding cancer cells in a pathology slide, or signs of diabetic disease in a photograph of a retina.

Even more challenging: How does a computer program obtain trust and persuasive powers so that skeptics like my mother will comply with recommendations? What discussions, diagrams, pressures or incentives will be sufficient to convince someone who may not be feeling well at all to change a behavior, a medication or a diet?

These skills are hard to come by in humans, let alone computers.

So should we give up? On the contrary. Let’s not fall into the trap of "the Superhuman Human Fallacy" — the demand that computers perform better than even the best of humans. A more useful comparison is to the way humans actually perform.

Even with imperfect hardware and simplistic algorithms, my mother's doing better than before, when weeks would pass between physician visits and treatment adjustments. I’m confident she and many other patients can do better still, but only if we shore up the two sides of the clinical compact.

On the one side, organized medicine has to change its practice so that it can ingest the day-to-day or even minute-to-minute measurements taken from our fast-growing population of chronically ill and aging people, and transduce these data into timely treatment. But without thoughtful and broad application of AI techniques to the process of health care, our already struggling and stressed health care workforce will simply not be able to meet this challenge.

And on the other side, AI cannot replace family and friends as guardians of health — not now and perhaps not ever.

AI may be good at chess and Go, and at developing expertise once reserved for doctors in arcane areas such as reading X-rays. But AI does not do well at understanding the wide world, at picking up mood or subtle signs of distress, at convincing a resistant human to listen to the doctor. We don't need AI for that; we need a caring village.

Dr. Isaac Kohane is the inaugural chair of the Department of Biomedical Informatics at Harvard Medical School.