
Commentary

The Manipulated Pelosi Video: Why We Embrace Fiction Over Fact

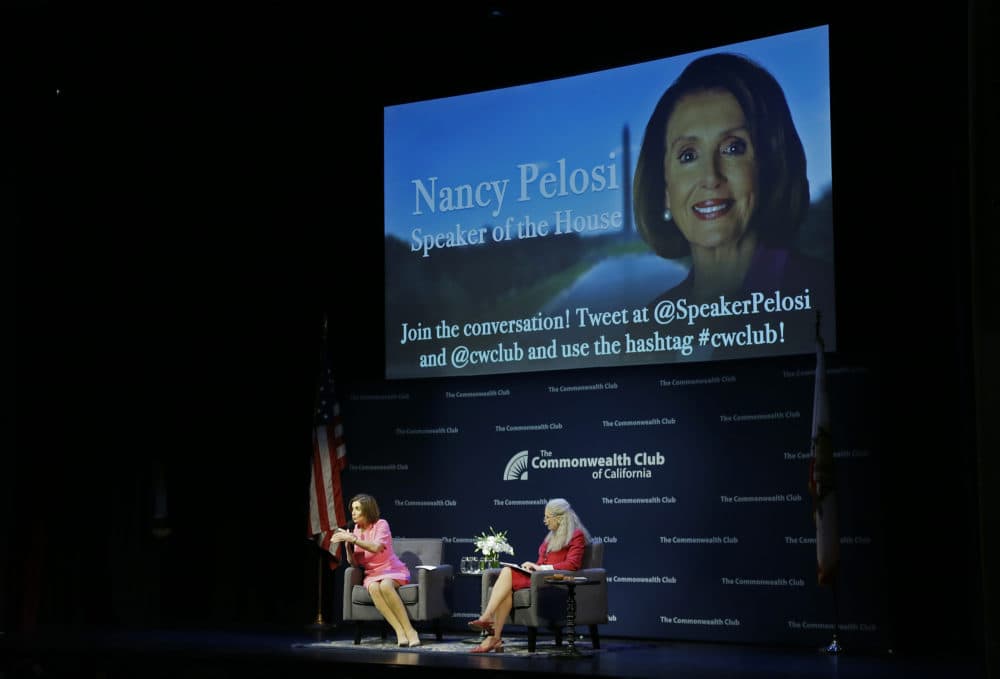

Last week, it was hard to avoid the video of Nancy Pelosi that had been slowed down to make her sound drunk, then tweeted by presidential lawyer Rudy Giuliani and shared widely on Fox News and on social media. In the face of an expert consensus that the video had been manipulated, YouTube took it down. Not so Twitter, which has refused to comment on its inaction, or Facebook. And while much of the important debate has focused on whether this video should be removed from social media sites, not enough of it has addressed how and why we are so willing to embrace falsehood over fact.

In an interview with Anderson Cooper, Monika Bickert, Facebook VP for Product Policy and Counterterrorism, tried to explain why the company hadn’t taken down the video. She pointed out that Facebook employs over 50 fact-checking organizations around the world. She argued that when people advocate violence or make other direct threats to people’s safety, those posts are removed. She asserted that Facebook had removed over 3 billion fake accounts since January, and (in a policy nuance whose significance was known only to her), said that if the fake video had been posted by a fake account rather than a real person, it would have been taken down. But when Cooper pressed her to explain why Facebook would remove fake accounts but not fake videos, her response was disingenuous at best.

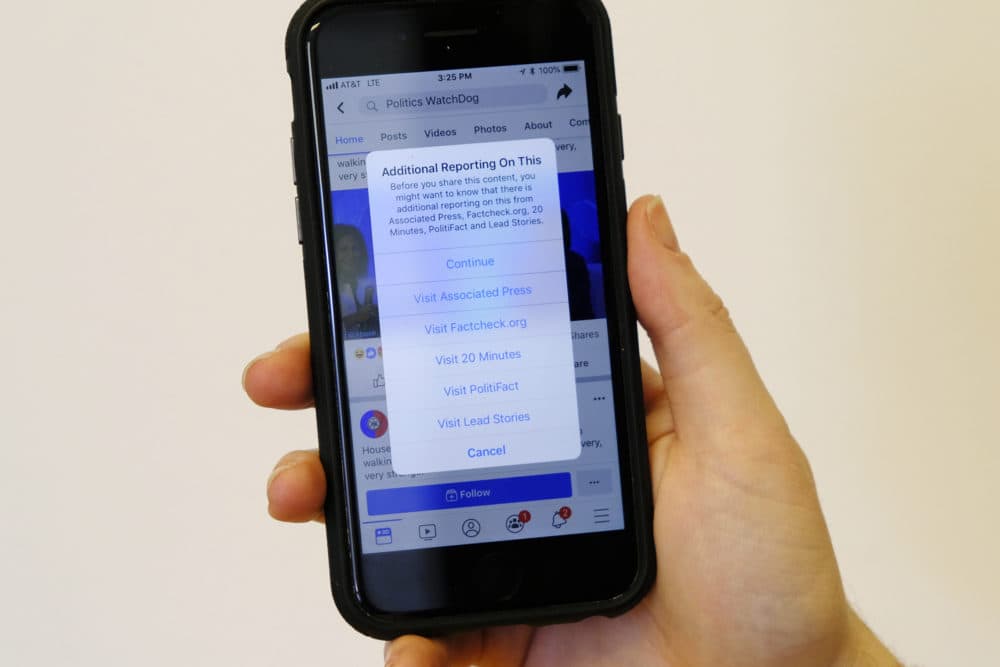

“We dramatically reduce the visibility of that content and we let people know it’s false so that they can make an informed choice,” she (falsely) claimed. In fact, Facebook users who try to share the video now see a pop-up telling them that “there is additional reporting on this available” from PolitiFact, 20 Minutes, Factcheck.org, Lead Stories, and the Associated Press.

Is that better than nothing? Sure. But are most people, let alone the people most inclined to think that Nancy Pelosi is an inebriated or demented incompetent, likely to go to any of those sites looking for that “additional reporting”? Of course not.

But what’s more disturbing, and certainly not unique to Facebook, is the worldview represented when she declared, “We think it’s important for people to make their own informed choice about what to believe…” In essence, she is saying: We’ll post videos we know are manipulated to mislead, and we’ll let viewers choose whether to believe the truth or the lie.

The problem with this approach is that, as the historian and “Sapiens” author Yuval Noah Harari argues in a recent New York Times op-ed, humans are uniquely predisposed to create and believe in lies. In fact, doing so can be socially useful, as subscribing to shared myths enables cooperation on a large scale.

“… [L]arge-scale cooperation depends on believing common stories,” he explains. “But these stories need not be true. You can unite millions of people by making them believe in completely fictional stories about God, about race or about economics.” Or about Nancy Pelosi.

Indeed, Harari maintains that fiction tends to be more powerful than truth in uniting subgroups of people. And that is, after all, the overarching objective of most political communications.

If the goal of a story is to help one tribe distinguish itself from another, a made-up story will be more differentiating than a true one. For example, we can all agree that Nancy Pelosi is two heartbeats away from the presidency, but the group that argues she can’t “put a subject in front of a predicate in the same sentence” (as was claimed on Fox) differentiates itself and its followers.


And, as Donald Trump has learned from Infowars’ Alex Jones, if your goal is to use stories both to accrue power and to generate demonstrations of loyalty, the more outrageous the story, the better. Almost everyone could have agreed that Hillary Clinton had connections in high places. But only the hardest core of Trump’s and Breitbart’s base would publicly subscribe to the notion that she operated a sex-trafficking ring in the basement of a pizza parlor. And once they have done so, they are unlikely to turn back. Instead, they’ll double down on their loyalty.

And therein lies the danger of massive news distribution systems like Twitter and Facebook disseminating fictions alongside facts. In doing so, they perpetuate the notion that truth is whatever we choose to believe.

But pointing our fingers at the distributors of lies will not mitigate the dangers of gullibility. We also have to look inward. As humans, we are (to quote the noted behavioral economist Dan Ariely) “predictably irrational.” We are driven by cognitive biases that lead us to more easily remember ideas, experiences, and beliefs that match our own, and to favor those whom we perceive as most similar to us. Our logic is often overcome by our hunger for consistency and validation. And all of these emotional needs and cognitive biases often combine to act against our own best interests.

We may not be able to change the policies of Facebook or Twitter. But if we are to make sound judgments and act for both our individual and collective good, we have to learn to live with ambiguity, with the cognitive dissonance evoked when reality doesn’t conform to our beliefs. And then we have to act on what’s real.