A First Pass At Interactive Live Radio

One thing we like to do in public radio is tease a feature story, or ongoing series, the night before it airs. Something like this:

It's a mental bookmark for our hardcore, round-the-clock listeners. It builds buzz and awareness around these fantastic feature stories.

But what about the more casual listener? These promos represent a brief, fleeting opportunity to engage them. What if they don't know what Morning Edition is, let alone when it airs? What if they really are quite interested in the story, but are busy all morning? Best-case scenario: they remember a key word or phrase, and search for it on our site a day or two later.

But we should strive to do better. We live in an extremely on-demand world, certainly when it comes to entertainment and even when it comes to news. We need to do more to adapt to technology, and meet more of the audience — as the cliche goes — where they are.

What if, instead, you could actually talk to your radio in that moment and say: "That sounds really interesting, I want to hear that right now."

Project CITRUS has tried to achieve that goal, as well as experiment with a news-focused voice skill. We started with "Dying on the Sheriff's Watch," a four-part series on deaths in Massachusetts county jails by WBUR's investigative unit. The team, composed at the time of Christine Willmsen and Beth Healy, found that people suffering from dire medical conditions in state jails were often ignored and mistrusted.

The companion Alexa skill we produced allows the listener to hear all four of those 10-minute stories — as well as three shorter, skill-exclusive "extra" segments — on demand, using just a few simple voice commands.

Reducing Friction Between Listener And Content

The project was novel for a few reasons. But as we've mentioned above, there was one area in particular we were interested in exploring: What can WBUR do to ensure our audience can reliably and easily find this series?

Since this was the investigative team's first major project, we could have pointed broadcast listeners to podcast platforms. But the team's RSS feed — while shiny and new at the outset of the series — is not project-specific. And as a result, the four "Sheriff's Watch" stories have gradually been buried under investigative reporting on other topics. That meant advising people to find "Dying on the Sheriff's Watch" with the familiar cliche of "wherever you get your podcasts" was not the best approach. (And even if it were, it leaves folks who don't "do podcasts" out in the cold.)

There is also a very smart-looking landing page for the series. But getting from that page to listening to all four parts (in order) is not intuitive; it involves some friction.

An Alexa skill, on the other hand, afforded us far more future-proofing. The skill is solely dedicated to "Sheriff's Watch," all the time, for all time. Instead of asking the listener to go searching for a needle in the haystack, we handed them a map that led right to the needle.

Here's an example of WBUR Morning Edition host Bob Oakes promoting the skill on the radio, as a tag to one of the "Sheriff's Watch" stories that had just aired:

There are, however, pitfalls to creating platform-specific skills. Of course, not everyone owns an Alexa-enabled device, which meant we were limiting access to a subsection of WBUR's audience. Even if they do own one, many people are not comfortable enabling skills. And even if they are, they should be prepared to enable the "Sheriff's Watch" skill manually. (We found it nigh impossible, at least initially, to enable the skill solely through voice commands. And we should note that Quick Links for Alexa weren't yet publicly available when this skill was produced.)

Finally, we had no research on whether this — nearly an hour of intent listening about a grim topic — matched well with the smart-speaker experience. Yes, our listeners tune in to WBUR for hours at a time. But that usually involves segmented hours, show breaks, much shorter stories and more passive listening.

Interstitials And Skill-Specific 'Extras'

The "Sheriff's Watch" skill stands out because, after initially invoking the skill, you won't again hear the Alexa voice that so many of us are accustomed to hearing. Instead, you immediately hear from Christine and Beth — who in addition to being the primary reporters also wear the hat of in-skill docent, guiding the listener from one story to the next.

Incorporating Christine and Beth's voices in this way was a deliberate decision. (Fortunately, they were game to do it.) Given the reporting's gravity and subject matter, it just didn't feel right editorially to hear Alexa speak. It also didn't align with the series' extremely high production value. (As news outlets continue to explore the intersection of journalism and new technology, internal debates about the virtue of using a voice assistant will only continue.)

Creating this more immersive experience, however, came with some unique production considerations. It also served as an educational process for future voice-skill development for editorial projects.

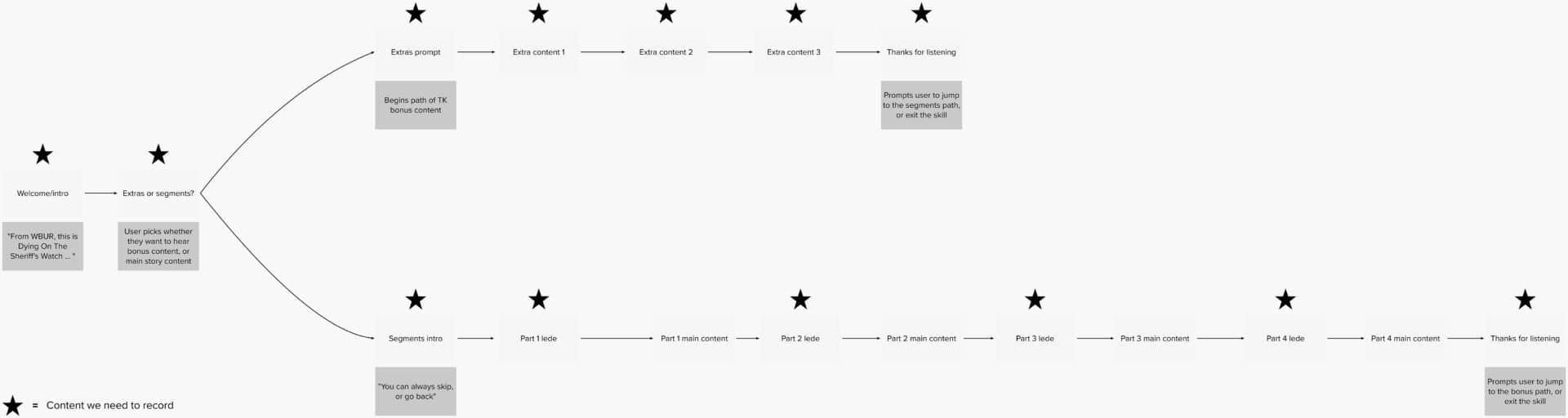

We began by conceptualizing a basic "flow," diagramming how one could progress through the experience, which wound up looking roughly like this:

Upon opening the skill, listeners will hear an introduction to the series. They are then given a choice: Would you like to hear the stories, or the extras? While we had the audio for all four stories at the ready, all the connective tissue — welcoming the user to the skill, asking them what they would like to hear, saying goodbye, etc. — had to be scripted and recorded. In addition to that bespoke tracking, we produced three skill-exclusive segments (in any major production, great material always lands on the cutting-room floor). These "extra" pieces allowed us to make the most of Christine and Beth's expansive reporting, and (we hope) enticed listeners to take the skill for a spin.
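For the technically curious, the flow described above can be sketched in a few lines of Python. This is a rough illustration, not the skill's actual code: the `Clip` type, the function names and the `example.org` URLs are all placeholders we made up for this post.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Clip:
    """One piece of recorded audio in the skill (hypothetical structure)."""
    title: str
    url: str


# Placeholder clips: four stories and three extras, matching the counts
# in the series. The URLs are invented for illustration only.
INTRO = Clip("Series introduction", "https://example.org/audio/intro.mp3")
STORIES = [Clip(f"Story {n}", f"https://example.org/audio/story-{n}.mp3")
           for n in range(1, 5)]
EXTRAS = [Clip(f"Extra {n}", f"https://example.org/audio/extra-{n}.mp3")
          for n in range(1, 4)]


def on_launch() -> Clip:
    """Opening the skill always starts with the recorded introduction."""
    return INTRO


def on_choice(choice: str) -> List[Clip]:
    """After the introduction, the listener picks 'stories' or 'extras'."""
    playlists = {"stories": STORIES, "extras": EXTRAS}
    key = choice.strip().lower()
    if key not in playlists:
        raise ValueError(f"Unrecognized choice: {choice!r}")
    return playlists[key]
```

Calling `on_choice("stories")` returns the four stories in order, and `on_choice("extras")` returns the three extra segments.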

We also had to prompt the user with the voice commands they had access to within the skill, if they wanted to jump ahead ("Alexa, skip") or go back ("Alexa, previous"). That all led to an afternoon in the studio with Christine, Beth and series editor Dan Mauzy, where we worked our way through those small snippets of tracking. Needless to say, it was a first for all of us.
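Here is a minimal sketch of how "skip" and "previous" might move a listener through the stories. It assumes those commands arrive as Alexa's built-in `AMAZON.NextIntent` and `AMAZON.PreviousIntent`, and that each clip is identified by a token; the token names and the `next_token` helper are hypothetical, not taken from the real skill.

```python
from typing import List, Optional

# Hypothetical tokens identifying each story, in playback order.
PLAYLIST: List[str] = ["story-1", "story-2", "story-3", "story-4"]


def next_token(current: str, intent_name: str,
               playlist: Optional[List[str]] = None) -> Optional[str]:
    """Return the token to play next, or None if there is nowhere to go."""
    items = PLAYLIST if playlist is None else playlist
    index = items.index(current)  # raises ValueError for an unknown token
    if intent_name == "AMAZON.NextIntent":
        index += 1
    elif intent_name == "AMAZON.PreviousIntent":
        index -= 1
    else:
        raise ValueError(f"Not a navigation intent: {intent_name}")
    # Stop at either end of the playlist instead of wrapping around.
    if 0 <= index < len(items):
        return items[index]
    return None
```

Returning `None` at either end of the list leaves it up to the caller to decide what happens there, for instance playing a recorded goodbye rather than looping back to the start.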

Later in 2020, Project CITRUS built another news-focused Alexa skill in partnership with Earplay, a Boston-based company that provides technology and services for interactive audio creators and publishers to build, deploy and manage voice experiences. We love working with Earplay's toolset and originally planned to use it for this inaugural voice project. Unfortunately, that wasn't possible with this skill.

The normal Alexa skill is modeled around a much more active back and forth with the user, and thus caps audio clips at four minutes (formerly a mere 90 seconds). Most of these stories clock in at over 10 minutes, so we had to build this skill from "scratch," using Alexa's AudioPlayer interface. Fortunately, the Alexa Skills Kit provides a fairly decent graphical interface for building out the framework for your skill. And once you understand the basic mechanics and vocabulary (an utterance is what the user says, an intent is what the user probably wants), development is pretty straightforward. More importantly, it integrates seamlessly with other AWS tools like Lambda, which provides the brains of the skill. (E.g., "Sounds like the listener wants the third story. What's the URL for the MP3?")
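To make that "brains" idea concrete, here is a rough sketch of a Lambda handler that maps a request for a story to an MP3 and answers with an `AudioPlayer.Play` directive. It is a simplified illustration under several assumptions: the intent name `PlayStoryIntent`, the slot name `storyNumber`, the tokens and the `example.org` URLs are all hypothetical, and the real skill answered with recorded audio from Christine and Beth rather than the plain-text fallback shown here.

```python
from typing import Any, Dict, Optional

# Hypothetical lookup table from story number to MP3 URL.
# AudioPlayer streams must be served over HTTPS.
STORY_URLS: Dict[str, str] = {
    "1": "https://example.org/audio/story-1.mp3",
    "2": "https://example.org/audio/story-2.mp3",
    "3": "https://example.org/audio/story-3.mp3",
    "4": "https://example.org/audio/story-4.mp3",
}


def build_play_response(url: str, token: str) -> Dict[str, Any]:
    """Build a skill response that starts long-form audio playback."""
    return {
        "version": "1.0",
        "response": {
            "directives": [{
                "type": "AudioPlayer.Play",
                "playBehavior": "REPLACE_ALL",  # drop anything already queued
                "audioItem": {
                    "stream": {
                        "url": url,
                        "token": token,  # our own identifier for this clip
                        "offsetInMilliseconds": 0,
                    }
                },
            }],
            "shouldEndSession": True,
        },
    }


def lambda_handler(event: Dict[str, Any],
                   context: Optional[Any] = None) -> Dict[str, Any]:
    """Entry point: work out which story was requested and play it."""
    request = event["request"]
    if request["type"] == "IntentRequest":
        intent = request["intent"]
        if intent["name"] == "PlayStoryIntent":  # hypothetical intent name
            slots = intent.get("slots", {})
            number = slots.get("storyNumber", {}).get("value")
            if number in STORY_URLS:
                return build_play_response(STORY_URLS[number],
                                           f"story-{number}")
    # Fallback: ask again. A plain-text prompt keeps the sketch short.
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {
                "type": "PlainText",
                "text": "Which story would you like to hear?",
            },
            "shouldEndSession": False,
        },
    }
```

The lookup table is the whole trick: once the intent and slot tell us the listener wants the third story, finding the MP3 is a dictionary lookup.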

Talk To WBUR, And WBUR Talks Back

Being able to have a conversational experience with WBUR reporters is, well, it's just plain cool. It's among the most engaging capabilities voice offers as a medium, so we were excited to see the vision pan out.

It also got us thinking about more intricate skills that leverage the technology even further by branching off into even more directions, and making listeners feel like they're deeply involved in what's happening. That kind of active engagement could build stronger relationships with our audience, and leave people feeling like they've grasped the content even better than if they had merely tapped a play button in a podcast app. And of course, we hope we also got the station thinking a bit more about on-demand audio when promoting series on the radio.

Finally, while we have no proof it happened, someone listening to our FM signal on an Alexa-enabled device could have heard that promo, enabled the skill and quickly branched off into our immersive feature-series experience. Yes, it's clunky and labor-intensive. But it is at least a finger pointing to a blueprint of some form of interactive radio.

If this post piqued your interest, check out the skill for yourself — and tell us what you think.